We need a different approach to supervisory stress-testing

Confusing processes turn tests into template-filling exercise, says Garp’s Jo Paisley

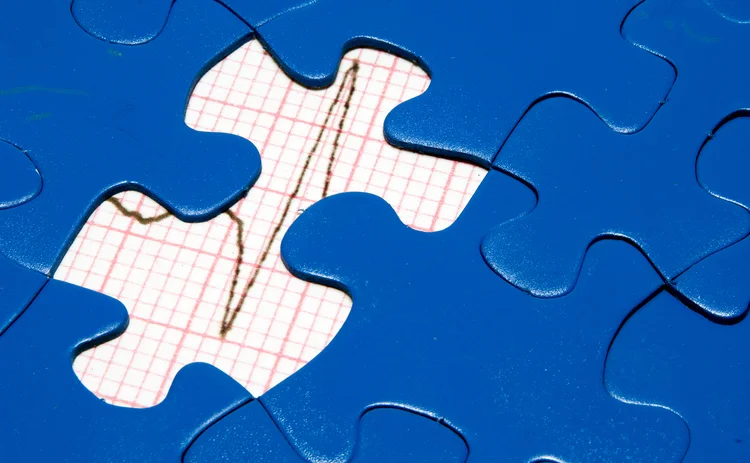

If you Google ‘stress test’, you’ll most likely see a person on a treadmill, hooked up to electrodes, with an ECG monitoring his or her heart. A stress test for a financial institution is similar: it puts the firm under strain and tests whether it is healthy enough to cope.

From a risk management point of view, stress-testing is a good thing. It’s forward-looking and provides insights into the

Copyright Infopro Digital Limited. All rights reserved.