This article was paid for by a contributing third party.

Benchmarking deep neural networks for low-latency trading and rapid backtesting

Faster, more powerful graphics processing units (GPUs) have the potential to transform algorithmic trading and offer a credible alternative to more expensive devices, explain Martin Marciniszyn Mehringer, Florent Duguet and Maximilian Baust from Nvidia’s technology engineering team

Lowering response times to new market events is a driving force in algorithmic trading. Latency-sensitive trading firms keep up with the ever-increasing pace of electronic financial markets by deploying low-level hardware devices, such as field-programmable gate arrays (FPGAs) and application-specific integrated circuits (Asics), in their systems.

As markets become increasingly efficient, traders need to rely on more powerful models such as deep neural networks (DNNs) to improve profitability. As the implementation of such complex models on low-level hardware devices requires substantial investment, general-purpose GPUs present a viable, cost-effective alternative to FPGAs and Asics.

Nvidia has demonstrated in the Securities Technology Analysis Center’s machine learning inference benchmark – known as Stac-ML [1] – that the Nvidia A100 Tensor Core GPU can run long short-term memory (LSTM) model inference with consistently low latencies. This shows that GPUs can replace or complement less-versatile low-level hardware devices in modern trading environments.

Stac-ML inference benchmark results

DNNs with LSTM layers are an established tool for time-series forecasting and are also applied in modern finance. The Stac-ML inference benchmark is designed to measure the latency of LSTM model inference, defined as the time from receiving new input information until the model output is computed.

The benchmark defines three LSTM models of varying complexity: LSTM_A, LSTM_B and LSTM_C. Each model has a unique combination of features, timesteps, layers and units per layer. LSTM_B is roughly six times larger than LSTM_A, and LSTM_C is roughly two orders of magnitude larger than LSTM_A.

There are two separate benchmark suites: Tacana and Sumaco. The Tacana suite is for inference performed on a sliding window, where a new timestep is added and the oldest removed for each inference operation. In the Sumaco suite, each inference is performed on an entirely new set of data.
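The difference between the two input patterns can be sketched in a few lines of Python. This is only an illustration of the idea, not part of the benchmark specification: the window length, the feature values and the names `SlidingWindow` and `fresh_window` are hypothetical.

```python
from collections import deque


class SlidingWindow:
    """Tacana-style input: keep only the most recent `timesteps` feature vectors."""

    def __init__(self, timesteps):
        # A bounded deque drops the oldest entry automatically on append.
        self.buffer = deque(maxlen=timesteps)

    def push(self, features):
        """Add one new timestep; return the full window once enough history exists."""
        self.buffer.append(list(features))
        if len(self.buffer) < self.buffer.maxlen:
            return None  # window not yet filled
        return list(self.buffer)


def fresh_window(rows):
    """Sumaco-style input: every inference receives an entirely new window."""
    return [list(row) for row in rows]


window = SlidingWindow(timesteps=3)
for t in range(5):
    model_input = window.push([float(t), float(t) * 10.0])

# After five pushes the window holds timesteps 2, 3 and 4.
print(model_input)
```

In the sliding-window case only one timestep changes between consecutive inferences, which is what makes stream-oriented optimisations possible in principle.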

Low-latency optimised results for the Tacana suite

Nvidia demonstrated the following latencies (99th percentile) on a Supermicro Ultra SuperServer SYS-620U-TNR with a single Nvidia A100 80GB PCIe Tensor Core GPU in FP32 precision (SUT ID NVDA221118b):

- LSTM_A: 35.2 microseconds [2]

- LSTM_B: 68.5 microseconds [3]

- LSTM_C: 640 microseconds [4]

These numbers relate to running inference on one model instance. It is also possible to deploy ensembles of independent model instances on a single GPU. For 16 independent model instances, the corresponding latencies are:

- LSTM_A: 54.1 microseconds [5]

- LSTM_B: 140 microseconds [6]

- LSTM_C: 748 microseconds [7]

Moreover, there were no large outliers in latency. The maximum latency was no more than 2.3 times the median latency across all LSTMs, even when the number of concurrent model instances was increased to 32 [8]. Having such predictable performance is crucial for low-latency environments in finance, where extreme outliers may result in substantial losses during fast market moves.
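The statistics quoted in this section (median, 99th percentile, maximum and the ratio of maximum to median) can be computed from raw latency samples as in the following sketch. The samples are synthetic, the function name `latency_summary` is hypothetical, and the nearest-rank percentile definition is an assumption: the article does not state which percentile method the benchmark uses.

```python
import statistics


def latency_summary(latencies_us):
    """Summarise latency samples given in microseconds."""
    ordered = sorted(latencies_us)
    n = len(ordered)
    median = statistics.median(ordered)
    # Nearest-rank 99th percentile: ceil(0.99 * n), computed in integer arithmetic.
    rank = (99 * n + 99) // 100
    p99 = ordered[rank - 1]
    worst = ordered[-1]
    return {
        "median": median,
        "p99": p99,
        "max": worst,
        "max_over_median": worst / median,
    }


# Synthetic data: 98 typical samples, one slow sample and one outlier.
samples = [30.0] * 98 + [35.0, 60.0]
summary = latency_summary(samples)
print(summary)
```

A small ratio of maximum to median indicates a tight latency distribution, which is the property highlighted above.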

Nvidia is the first vendor to submit numbers for the Tacana suite of the benchmark. In contrast to the Sumaco suite, the Tacana benchmark allows sliding window optimisations, which facilitate exploiting the stream-like nature of time-series data. Previous submissions for the Sumaco suite of the Stac-ML benchmark claimed latency figures within the same order of magnitude.

High-throughput optimised results for the Sumaco suite

Nvidia also submitted a throughput-optimised configuration on the same hardware for the Sumaco suite in FP16 precision (SUT ID NVDA221118a):

- LSTM_A: 1.629 to 1.707 million inferences per second at a power consumption of 949 watts [9]

- LSTM_B: more than 190 thousand inferences per second at a power consumption of 927 watts [10]

- LSTM_C: 12.8 thousand inferences per second at a power consumption of 722 watts [11]

These figures confirm that Nvidia GPUs are exceptional in terms of throughput and energy efficiency for workloads such as backtesting and simulation.
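As a rough illustration of the energy-efficiency claim, the quoted throughput can be divided by the quoted power consumption to give inferences per joule. The sketch below uses only the figures listed above. It takes the lower end of the LSTM_A range and treats the LSTM_B figure as a lower bound, so the results are indicative rather than official benchmark metrics. The function name is hypothetical.

```python
# (throughput in inferences per second, power consumption in watts), as quoted above.
results = {
    "LSTM_A": (1.629e6, 949.0),  # lower end of the quoted range
    "LSTM_B": (190e3, 927.0),    # quoted as a lower bound
    "LSTM_C": (12.8e3, 722.0),
}


def inferences_per_joule(throughput_per_s, power_w):
    """One watt is one joule per second, so the seconds cancel out."""
    return throughput_per_s / power_w


efficiency = {
    name: inferences_per_joule(tput, watts)
    for name, (tput, watts) in results.items()
}

for name, value in efficiency.items():
    print(f"{name}: {value:,.1f} inferences per joule")
```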

Impact on automated trading

Why does a microsecond – a timespan in which light can travel only about 300 metres – even matter in automated trading? Mature electronic markets disseminate new information at high speed. Trading applications that rely on complex neural networks such as LSTMs run the risk that model inference takes too long.

To place inference latencies in a high-frequency trading context, Nvidia analysed the time intervals between market trades in one of the most actively traded financial contracts in Europe: the Euro Stoxx 50 Index futures [12].

The high-precision timestamp dataset comprises nanosecond tick data corresponding to trades on new price levels during a one-month period (October 2022). The analysis counted how often the time difference between two consecutive trades was less than the previously noted latencies. The resulting estimates on the frequency of events queuing in the inference engine were as follows:

- LSTM_A: 0.14% of occurrences

- LSTM_B: 0.58% of occurrences

- LSTM_C: 8.52% of occurrences
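The counting procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used for the analysis: the timestamps are synthetic, the function name `queuing_frequency` is hypothetical, and the choice to divide by the number of consecutive-trade gaps is an assumption about how the percentages were normalised.

```python
def queuing_frequency(trade_times_ns, latency_us):
    """Fraction of consecutive-trade gaps that are shorter than the inference latency."""
    latency_ns = latency_us * 1_000
    # Time differences between each trade and the one that follows it.
    gaps = [later - earlier for earlier, later in zip(trade_times_ns, trade_times_ns[1:])]
    if not gaps:
        return 0.0
    return sum(1 for gap in gaps if gap < latency_ns) / len(gaps)


# Synthetic nanosecond timestamps of six trades (not market data).
timestamps_ns = [0, 20_000, 500_000, 530_000, 2_000_000, 9_000_000]

freq_fast_model = queuing_frequency(timestamps_ns, latency_us=35.2)
freq_slow_model = queuing_frequency(timestamps_ns, latency_us=640.0)
print(freq_fast_model, freq_slow_model)
```

A gap shorter than the inference latency means the next trade arrives while the previous inference is still running, so the new event has to wait.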

As shown, Nvidia GPUs enable electronic trading applications to run inference in real time on very large LSTM models serving some of today’s fastest-moving markets. A model as complex as LSTM_B achieves an extraordinarily low queuing frequency of 0.58%. Even for the most complex model, LSTM_C, with a queuing frequency of 8.52%, the Nvidia submission delivers a state-of-the-art inference latency below one millisecond.

Advantages of Nvidia GPUs for electronic trading

Nvidia GPUs provide numerous benefits that help lower the total cost of ownership of an electronic trading stack, as detailed below.

Training and deployment platform

Regardless of whether you need to develop, backtest or deploy an artificial intelligence (AI) model, Nvidia GPUs deliver performance without forcing developers to learn different programming languages and programming models for research and trading. All Nvidia GPUs support Compute Unified Device Architecture (Cuda), Nvidia’s parallel computing platform and programming model, and can therefore be programmed in the same way, regardless of whether you are using the respective device in a development workstation or a data centre.

In addition, Nvidia Nsight tools consist of a collection of powerful developer resources to debug and profile applications, improving their performance. In many cases, it is not even necessary to learn Cuda. Modern machine learning frameworks such as PyTorch expose performance-relevant features, such as Cuda Graphs and Cuda streams, and offer sophisticated profiling capabilities.

Performance improvements

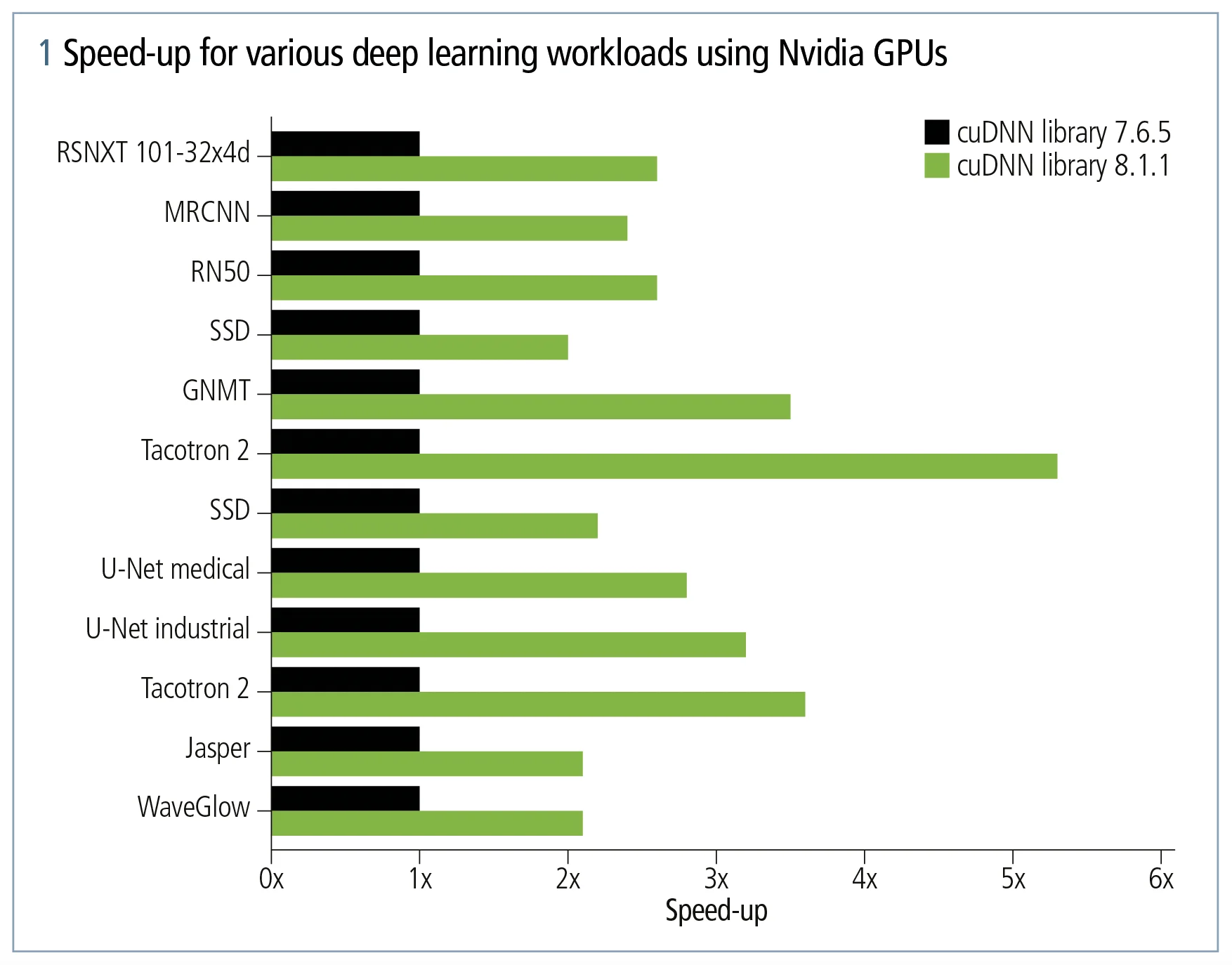

Nvidia is continuously improving the performance of its core libraries, such as cuBlas for accelerating basic linear algebra subroutines, Cutlass for high-performance general matrix multiplications and cuDNN for accelerating DNN primitives. All of these libraries facilitate flexible performance tuning or even provide auto-tuning capabilities to select the best primitives for a given combination of GPU and application. For this reason, a stack for AI applications based on Nvidia GPUs can become faster throughout its lifetime.

High-compute density

Efficient use of space in data centres is essential. With even a single Nvidia A100 Tensor Core GPU installed in a server, it is possible to achieve the following space efficiency numbers:

- LSTM_A: 666,621–694,874 inferences per second per cubic foot [13]

- LSTM_B: 77,714–77,801 inferences per second per cubic foot [14]

- LSTM_C: 5,212 inferences per second per cubic foot [15]

These numbers are taken from the report on the throughput-optimised configuration (SUT ID NVDA221118a), not the latency-optimised equivalent. The Supermicro server is Nvidia-certified for up to four Nvidia A100 GPUs, which would increase the compute density accordingly.

Large ecosystem and developer community

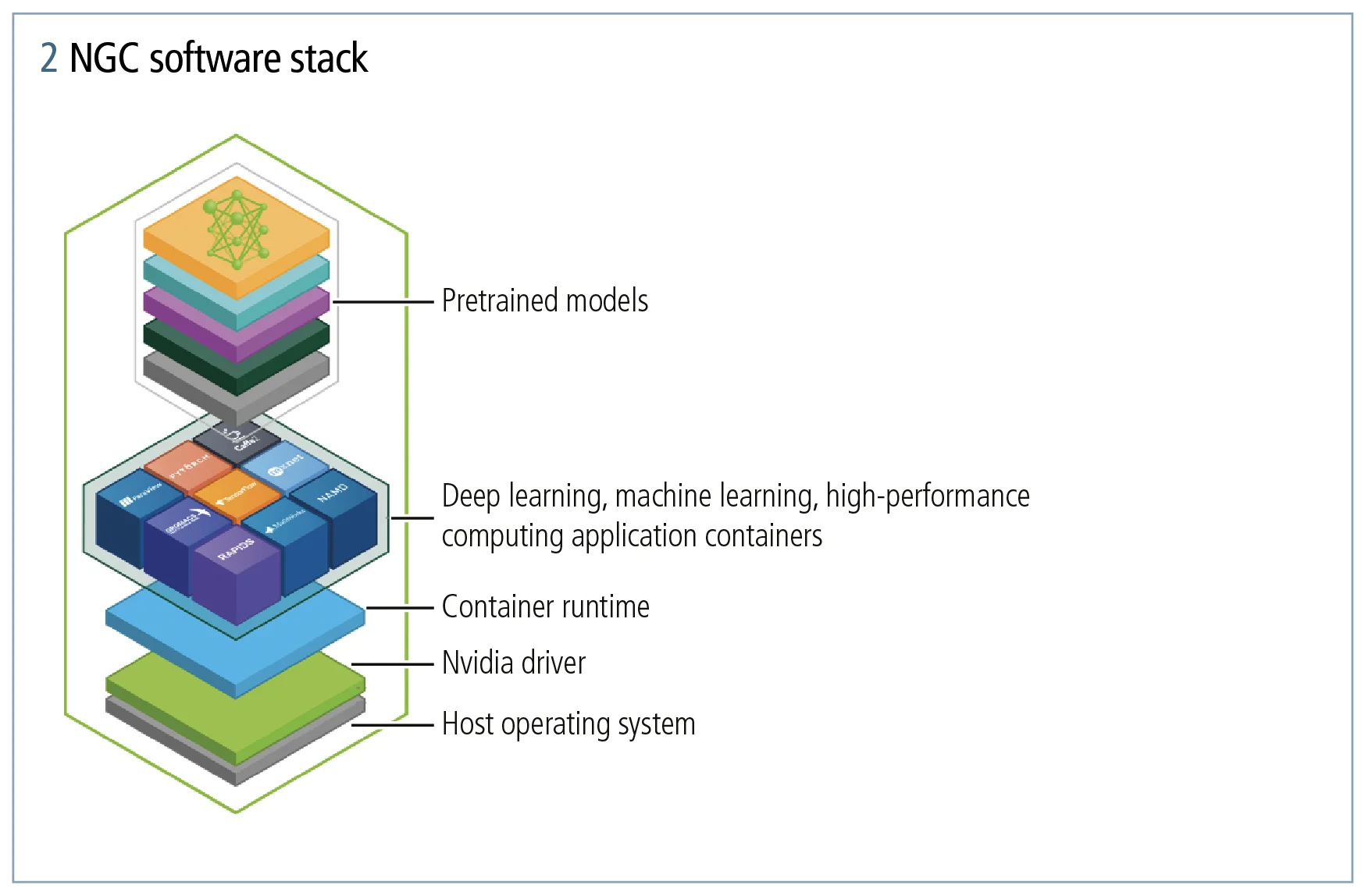

Nvidia GPUs support many deep learning frameworks, such as PyTorch, TensorFlow and MXNet, which are used by data scientists and quantitative researchers worldwide. To ease the pain of dependency management, all frameworks are shipped as container images comprising the latest version of Nvidia’s libraries. This lowers the burden of setting up development environments and ensures reproducibility of results. These container images can be obtained easily through Nvidia GPU Cloud (NGC), which offers fully managed cloud services as well as a catalogue of GPU-optimised AI software and pretrained models.

Summary

The results achieved in the Stac-ML inference benchmark demonstrate the added value of GPUs in standalone and complementary low-latency environments. With quant research and trading development conducted on the same platform, time to production can be significantly reduced. A single hardware target lifts the burden of having to maintain several implementations for distinct platforms. This paradigm shift – the consolidation of the development stack between research and trading – is a key advantage of the Nvidia accelerated computing platform.

Notes

1. Stac and all Stac names are trademarks or registered trademarks of the Securities Technology Analysis Center

2. Stac-ML.Markets.Inf.T.LSTM_A.1.LAT.v1

3. Stac-ML.Markets.Inf.T.LSTM_B.1.LAT.v1

4. Stac-ML.Markets.Inf.T.LSTM_C.1.LAT.v1

5. Stac-ML.Markets.Inf.T.LSTM_A.16.LAT.v1

6. Stac-ML.Markets.Inf.T.LSTM_B.16.LAT.v1

7. Stac-ML.Markets.Inf.T.LSTM_C.16.LAT.v1

8. Stac-ML.Markets.Inf.T.LSTM_A.2.LAT.v1

9. Stac-ML.Markets.Inf.S.LSTM_A.[1,2,4].TPUT.v1

10. Stac-ML.Markets.Inf.S.LSTM_B.[1,2,4].TPUT.v1

11. Stac-ML.Markets.Inf.S.LSTM_C.[1,2,4].TPUT.v1

12. Data courtesy of Deutsche Börse

13. Stac-ML.Markets.Inf.S.LSTM_A.[1,2,4].SPACE_EFF.v1

14. Stac-ML.Markets.Inf.S.LSTM_B.[1,2,4].SPACE_EFF.v1

15. Stac-ML.Markets.Inf.S.LSTM_C.[1,2,4].SPACE_EFF.v1

Sponsored content

Copyright Infopro Digital Limited. All rights reserved.
