Integrated data management for commodities

Managing market data for risk and valuation is a universal challenge for institutions. At the basic level, data captured from different sources must be standardised, assessed, integrated and passed through quality-control processes to create a clean set of prices for daily mark-to-market and risk management. Reference data - whether it is a legal hierarchy, a reporting hierarchy, terms and conditions of new instruments, definitions of product grades or customer profiles - must be accurate and up to date. Data such as variance-covariance matrices, volatility skews or zero curve data must undergo a thorough quality assurance (QA) process. This is especially true for commodity data, given its volatile nature, the frequent combination of external data with internal assessments and the integration of commodity data with other 'financial' asset classes in an overall risk management framework.

Asset Control has been supplying leading financial institutions with solutions for data management for nearly two decades. Asset Control's software suite includes coverage of price data from tick-by-tick to end-of-day, counterparty data, corporate actions and security master data. It offers specially configured solutions to address specific challenges in data management. These include:

- AC PriceMaster - for the management of price data, including clean end-of-day data, risk factors and consolidated prices.

- AC CompanyMaster - for the management of legal entity and credit risk data including ratings, issuer data, credit derivatives and internal information.

- AC SecurityMaster - 'golden copy' reference data on instruments and issuers.

- AC Corporate Actions - for consolidating corporate action data and monitoring its lifecycle.

Although this article focuses on commodity prices and curves, the data methodology discussed holds for all asset types and is applied across the middle and back offices of banks, investment managers, hedge funds, energy firms and clearing houses. Below, we discuss some of the unique data management aspects of commodities and why a structured approach to data quality makes sense. The main drivers include: burgeoning volumes; the recognition of commodities as an interesting asset class by the mainstream investment world; the introduction of new asset types such as the recent 'compliance' market in carbon; new exchanges; and the subsequent proliferation of content providers in this space.

Market landscape and development

Over the past several years - coinciding with a boom in prices - commodities have rapidly become an integrated product line for many sell-side institutions and a more ordinary asset class to invest in for the buy-side. More and more products have been offered by investment banks or have become listed on exchanges, which have trended towards screen-based trading. Central counterparty models have also boosted market liquidity. New primary products have been created as power exchanges are adding emissions to their portfolio of products, and emissions are now part of the overall energy portfolio.

Because investment managers have added commodities to diversify, the proportion of fund holdings in commodities is expected to top 10% in the near future. Many hedge funds have been extending their trading platforms to energy commodities, seeking higher returns, as spreads in many regions and products are attractive. Banks are offering power-based or emission-based risk products, and more hybrid/structured products - that is, products linked to indices such as the Goldman Sachs Commodity Index - can be expected from the banks for institutional investors and retail individuals. As a result, commodities are no longer fundamentally different from other traded products. The main system providers in risk and portfolio management now have commodities fully integrated in their product lines, which means that the data supply to these systems must keep pace.

Data challenges

From a data management perspective, commodities pose some unique challenges.

- First, energy commodities are among the most volatile products ever created. This means that data quality has a different dimension here and that access to accurate and independently verified price and curve data is essential.

- Second, it is necessary to show explicit links and correlations between different products and to look at the whole group within a commodity complex. For example, there is a large set of grades of oil, only a couple of which have a forward market (for example, West Texas Intermediate (WTI) and Brent Crude). Often, you need to proxy the physical with one of these grades or you have a product sitting between two other products (for example, ethanol between oil and sugar).

- Third, because the long end of a curve is often very illiquid, the bid-offer spread and illiquidity of longer-dated contracts should be included in measures of value-at-risk (VAR) and stress testing. Also, the high transaction costs of rolling over futures positions or hedging longer-dated exposures need to be accounted for in the risk measure.

- Fourth, commodity prices are exposed to a very large set of risk factors that combine such diverse items as:

  - event risk;

  - cash flow risk;

  - basis risk;

  - legal/regulatory risk;

  - operational risk;

  - tax risk; and

  - geopolitical/macro and weather risk.

The political situation often determines the relative rarity and supply risks that impact the price. More data, including macro-economic data, will need to be integrated to get to the full picture. Also, some commodity prices are correlated with the business cycle and some are not.

- Finally, because of the enormous variety of grades and the developments in structured products, a flexible static data model is essential to cater for descriptions of all current and future product varieties. Required data attributes will also vary by jurisdiction and company type. In the case of utilities, for example, it is necessary to include tax reliefs applicable to the use of biomass fuels and the costs of emissions (for example, the use of coal versus the use of natural gas).

The forward curve - getting the basics right

The forward curve is the foundation of any commodity product pricing and, therefore, a core ingredient to any rates system. However sophisticated your derivative pricing model may be, if the forward curve that is put into the calculation is inaccurate, the resulting errors will overshadow any value the complex pricing model has to offer. A common error in a valuation process is to focus on pricing exotic products, while the foundation of the whole process - the forward price curves that affect the valuation of the whole portfolio - is inaccurate to begin with.

There are several reasons why commodity forward curves are becoming part of a standard set of risk factors in larger banks:

- As many banks have established or expanded their commodities trading desks, or have expanded their business to new markets, their exposure to market risks needs to be brought under the overall VAR umbrella.

- The EU's forthcoming Markets in Financial Instruments Directive (Mifid), due to become effective in November 2007, also covers commodities and commodity derivatives. This means that reporting requirements, which need unique instrument identification, and trading venue comparison will also come into play here.

- To some extent, new players have increased volatility and increased the need for more proactive energy risk management. Whereas, in the past, market analysis was based more on fundamentals, the traditional balance between speculators and producers/consumers has disappeared, and investors sometimes account for 75% of traded volume; therefore they can distort the market and trade against the fundamentals.

- For corporates, recent accounting developments such as FAS 133 stipulate that derivatives need to be revalued on a regular basis, even if employed by an end-user such as a power producer, an airline or a shipping company. Consequently, the need for good prices and forward curves also applies to corporates.

A need for a flexible modelling paradigm and derived data

Any data management system that caters for commodity forward curves should not only be able to show the (cor)relations between different elements of a complex, but should also be able to impose a data QA process and manipulation on forward curves. This may include the following:

- Detecting or correcting seasonality. Seasonality effects differ between products. For example, WTI has no seasonality, whereas heating oil exhibits seasonality and electricity has two seasonality factors during a year. Historical temperature data may need to be contrasted with an observed forward price curve.

- Deriving data from primary and daughter commodities, such as the calculation of different spreads (for example, crack spread, dark spread and spark spread), and unit of measurement conversions, such as rebasing prices from a CAD/gigajoule to a USD/million British thermal units basis.

- Different data representations may be necessary for different purposes, so flexibility in viewing data is required. For example, in some cases a forward curve should be presented with maturity expressed as actual calendar days and in other cases as different offsets from today's date (1M, 2M, 6M, 1Y, 2Y, and so on). Another example would be different representations of volatility smiles and surfaces in delta points or in absolute dollar terms.

- Validation functions that operate on the data may check for day-to-day consistency, and may also check the curve at time T against the curve at time T-1 in terms of percentage change, absolute shift, expected jump of the long end versus observed jump in the short end, etc.

- A capability to compare data from different sources. One example would be to compare the WTI curve built out of trader assessments against the WTI constant maturity futures curve built out of New York Mercantile Exchange (Nymex) futures. Another would be to compare the Nymex curve with the IntercontinentalExchange (ICE) curve: the ability to detect whether anything significant happened between the ICE close and the Nymex close is imperative prior to using the curve.
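Two of the operations listed above, unit rebasing and the T versus T-1 check, can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a description of any particular product: the function names, the tenor labels, the FX rate passed in and the tolerance values are all hypothetical, and the energy conversion assumes the International Table definition of the Btu.

```python
from dataclasses import dataclass

# Energy conversion: 1 MMBtu = 1.055056 GJ (International Table Btu).
GJ_PER_MMBTU = 1.055056


def rebase_cad_gj_to_usd_mmbtu(price_cad_per_gj: float, usd_per_cad: float) -> float:
    """Convert a price quoted in CAD/GJ to USD/MMBtu.

    usd_per_cad is supplied by the caller; which FX fixing to use
    is a policy decision outside this sketch.
    """
    return price_cad_per_gj * GJ_PER_MMBTU * usd_per_cad


@dataclass
class CurveCheck:
    tenor: str
    pct_change: float
    abs_shift: float
    suspect: bool


def validate_day_on_day(today, yesterday, max_pct=0.10, max_abs=5.0):
    """Compare the curve at T with the curve at T-1, tenor by tenor.

    today / yesterday: dicts mapping a tenor label to a price.
    max_pct and max_abs are hypothetical tolerances; in practice they
    would be tuned per product and per tenor.
    """
    results = []
    for tenor, price in today.items():
        prev = yesterday.get(tenor)
        if prev is None or prev == 0:
            # No usable comparison point: flag for manual review.
            results.append(CurveCheck(tenor, float("nan"), float("nan"), True))
            continue
        abs_shift = price - prev
        pct_change = abs_shift / prev
        suspect = abs(pct_change) > max_pct or abs(abs_shift) > max_abs
        results.append(CurveCheck(tenor, pct_change, abs_shift, suspect))
    return results
```

A point flagged as suspect is not necessarily wrong. Given how volatile energy prices are, the flag only marks a value that someone should look at before it is used.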

AC PriceMaster

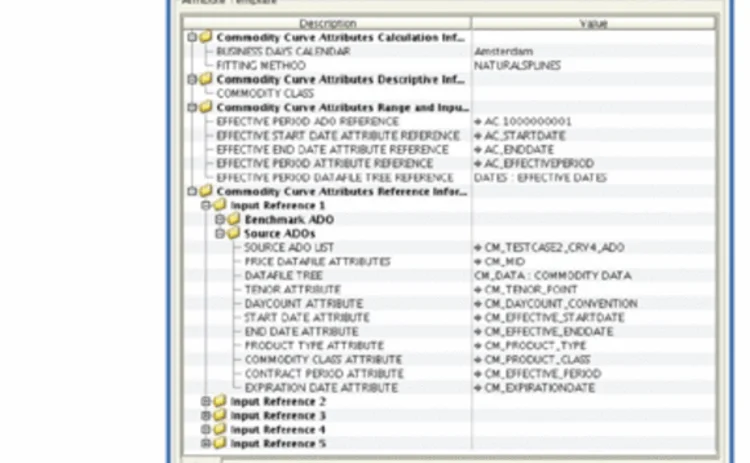

The AC PriceMaster solution provides an integrated and modular approach to price data management of all asset classes including structured products and commodities. Features include the following:

- Off-the-shelf interfaces to real-time gateways, such as Bloomberg, Telekurs, Financial Times Interactive Data, Reuters and broker pages.

- Price consolidation to standardise and aggregate multiple sources. Business rules - customised to product, geography and application - specify sources, time windows and aggregation policies.

- Library of validation rules, such as calendar checks, consistency checks, comparisons against a benchmark or against historical returns or volatility, and checks within, for instance, a curve or a smile to detect slope changes or humps.

- Automated workflow for routing suspect items to the relevant specialist or department. Extensive role and object-based security for optimal reliability.

- Curve library to generate secondary data. This includes commodity forward curve generation based on source data such as future outrights, spreads on future outrights, Platts spreads and swaps.

- End-user desktops for research, visualisation and ad hoc analysis. Rich charting functionality with options to slice and dice the data any way you want, ideal to investigate market anomalies and to track risk factors.

- Complete audit trail to determine who changed or approved what and at what time, combined with granular data permissioning and role-based security. This means it is possible to determine very clearly what users can and cannot do as well as which data sets they can see and touch.

- Insight into the complete data lineage that shows the end curve or price result together with the constituent sources.

- Access rights to the attribute level and a status code for each field in a quote indicating profit and loss grade, risk grade, billing/invoicing grade, reason for being suspect, interpolated value, and so on.
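To make the idea of a within-curve check concrete, the following Python sketch counts changes of direction along a curve, one simple way to detect slope changes or humps. It is an illustration only and does not reproduce the rules shipped with AC PriceMaster: the function names, the `min_move` noise threshold and the `max_changes` allowance are assumptions.

```python
def slope_sign_changes(prices, min_move=0.0):
    """Count changes of direction along a curve.

    prices: curve points ordered by maturity.
    min_move: steps no larger than this (in price units) are treated
    as flat and ignored; a hypothetical noise threshold.
    """
    signs = []
    for prev, curr in zip(prices, prices[1:]):
        step = curr - prev
        if abs(step) <= min_move:
            continue
        signs.append(1 if step > 0 else -1)
    # A change of direction is any pair of consecutive steps with opposite signs.
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


def has_hump(prices, min_move=0.0, max_changes=0):
    """Flag a curve whose direction changes more often than allowed."""
    return slope_sign_changes(prices, min_move) > max_changes
```

The allowed number of direction changes would differ by product. A seasonal curve such as heating oil or electricity legitimately changes direction, so `max_changes` would be set higher there than for a product without seasonality.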

The overall benefits of the AC PriceMaster prices and curves system for different departments in an institution can be summarised as shown in Table A.

Our overall approach to data management and how we provide an integrated solution

All Asset Control solutions share the underlying AC Plus data architecture. AC Plus takes in feeds from different data vendors in the original format and then maps them to a common standard so that different sources can be cross-validated and combined with internal data. All feed handlers are maintained by Asset Control in close co-operation with the content providers. This standardisation exercise can entail the application of thousands of normalisation and cross-validation rules, which are packaged with the off-the-shelf interfaces. This is a major operational risk mitigant in data aggregation and integration.

Extensive business rules can automate and reduce manual involvement in tasks of checking, matching and reconciling suspect data, while formulated routines automate a significant percentage of exception handling.

In the multisourced Consolidation Model (also known as the 'golden copy' model) the values are derived from the original vendor or internal data. How consolidation takes place, which attributes are chosen, which values to accept and when to flag a value as suspect are defined by rules attached to the Consolidation Model.
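A consolidation rule of this kind can be sketched in Python as follows. This is a minimal illustration under assumptions, not the actual rule set of the Consolidation Model: the source names, the priority-list policy, the use of the cross-source median as a reference and the 2% tolerance are all hypothetical, and time windows are left out for brevity.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Quote:
    source: str
    price: float


@dataclass
class GoldenQuote:
    price: float
    source: str
    suspect: bool


def consolidate(quotes, priority, tolerance=0.02):
    """Pick one value for an instrument from several sources.

    quotes: list of Quote for one instrument and date.
    priority: source names, most preferred first.
    tolerance: maximum relative deviation from the cross-source
    median before the chosen value is flagged as suspect.
    """
    by_source = {q.source: q for q in quotes}
    chosen = next((by_source[s] for s in priority if s in by_source), None)
    if chosen is None:
        return None  # none of the preferred sources delivered a value
    mid = median(q.price for q in quotes)
    suspect = mid != 0 and abs(chosen.price - mid) / abs(mid) > tolerance
    return GoldenQuote(chosen.price, chosen.source, suspect)
```

Keeping the source name on the result preserves the lineage of the consolidated value, so that a flagged price can be traced back to the vendor or internal assessment it came from.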

The golden copy data model provides comprehensive coverage of asset classes, ranging from listed securities to over-the-counter derivatives and structured products.

The progressive data modelling methodology of AC Plus delivers full transparency and full control of the data. Feed handlers source internal and external data using pre-built data vendor interfaces.

From the raw vendor model to a consolidation model based on cross-vendor normalisation, all information at every step is kept available in the database. The Notifier, the system's publishing function, provides full control over what data is going out and where it is going. Data can be accessed via the end-user tools, via Excel and Java Message Service, or can be published in real time or in batches.

Conclusion - let us take the risk out of your data

Asset Control provides tailored products that help institutions take the risk out of their data management. We provide modular solutions that enable our customers' data management platforms to expand in coverage and functionality, as their institutional needs grow. Asset Control's modular products - built with the input of dozens of clients and hundreds of users worldwide - provide structured and integrated solutions that turn the data nightmare into a good night's sleep.

Commodities have become an integrated product line in many institutions. Regulatory demands, plus the unique characteristics of this asset class, make a solid yet flexible prices and curves data management solution essential. Industrial-strength processing and data standards relating to identification will likely spread to commodities. Moreover, a solid and integrated data store also allows institutions to take a comprehensive approach to the market and to do multicommodity arbitrage, for example in energy, between emissions, freight, energy and weather derivatives. In addition, the interplay between commodities and financial markets risk factors - from currency and interest rates to equity prices of mining and energy companies - can also be exposed. For this, a full and trustworthy set of market data is required. Data only becomes valuable information once it has undergone a QA process.

We invite you to visit our website, to read our white papers, to contact us for additional information or - best of all - to visit our clients to see how they have taken the risk out of their data.

- Martijn Groot is head of product management at Asset Control, where he oversees product direction and product quality. Prior to Asset Control, he worked at ABN AMRO Amsterdam, where he held a variety of positions within technology and risk management. He holds an MBA from Insead, an MSc in mathematics from the Free University, Amsterdam and is a certified financial risk manager from the Global Association of Risk Professionals.

Contacts Europe: Todd van Biezen

T: +31 512 389 100

US: Joanne Ferrara

T: +1 212 445 1076.

Sponsored content

Copyright Infopro Digital Limited. All rights reserved.

You may share this content using our article tools. Printing this content is for the sole use of the Authorised User (named subscriber), as outlined in our terms and conditions - https://www.infopro-insight.com/terms-conditions/insight-subscriptions/

If you would like to purchase additional rights please email info@risk.net
